
I recently spent some time rebuilding a small Go application and wanted to implement a simple test, build, and deploy pipeline to a Kubernetes cluster I have on Digital Ocean. This took longer than I expected, so I will detail what I did in this post, both for anyone who needs it and for my future self.

Note: this is a very simple approach. It just tests, builds, and deploys, and effectively hopes for the best. There is no blue/green deployment or anything that fancy.

Throughout this post it is assumed that the application name is "appname" and the namespace is also "appname".

End State

I wanted the following to happen when I pushed code to the master branch:

- Tests run against the application

- The application is built

- The Docker image is built

- The Docker image is pushed to a private Docker repository and tagged with a version number set in code

- The Kubernetes manifests are updated to use the latest version

- New containers are created using the new version

- Kubernetes is monitored for success or failure

This involves a few pieces behind the scenes. At the application level, we need two main files: Dockerfile and Makefile.

Dockerfile

This is the default Dockerfile I use for Go applications. It's super light and secure, and the images are tiny.

FROM golang:alpine as builder

ENV GO111MODULE on

RUN mkdir -p /build

WORKDIR /build

COPY go.mod go.sum ./

RUN apk add git && go mod download

ADD . .

RUN mkdir -p /app

# If you have static assets like templates

COPY tpl /app/tpl

RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -ldflags '-extldflags "-static"' -o /app/appname .

# FROM alpine

FROM gcr.io/distroless/base

COPY --from=builder /app /app

WORKDIR /app

EXPOSE 80 8080

CMD ["./appname"]

Makefile

The Makefile contains quite a few different pieces but I'll drop in only the relevant ones.

The version is kept in the codebase in the following line:

var version = "0.1"

This allows the grep and sed commands to work correctly. The Makefile looks like this:

version=$(shell grep "version" main.go | sed 's/.*"\(.*\)".*/\1/')

version:
	echo ${version}

publish:
	docker build --rm -t username/appname:${version} . && docker push username/appname:${version}

update:
	VERSION=${version} envsubst < k8s/deployment.yml | kubectl apply -n appname -f -
	kubectl apply -f k8s/service.yml -f k8s/ingress.yml -n appname

Now we can use make version to see the version, make publish to build and publish a Docker image with the latest version, and make update to deploy the latest version to the Kubernetes cluster.
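To make the grep and sed step less opaque, here is a minimal Go sketch of the same idea: find the version declaration and pull out the quoted value. The function name extractVersion and the regular expression are my own illustration, not part of the Makefile, and the pattern is slightly stricter than the sed expression because it only matches a `version = "..."` assignment.

```go
package main

import (
	"errors"
	"fmt"
	"regexp"
)

// versionLine matches a declaration such as: var version = "0.1"
// and captures the text between the quotes.
// (Hypothetical helper for illustration; the Makefile uses grep and sed.)
var versionLine = regexp.MustCompile(`version\s*=\s*"([^"]*)"`)

// extractVersion returns the quoted version found in src,
// or an error if no matching declaration exists.
func extractVersion(src string) (string, error) {
	m := versionLine.FindStringSubmatch(src)
	if m == nil {
		return "", errors.New("no version declaration found")
	}
	return m[1], nil
}

func main() {
	src := "package main\n\nvar version = \"0.1\"\n"
	v, err := extractVersion(src)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	// Build the image tag in the same shape the publish target uses.
	fmt.Printf("username/appname:%s\n", v)
}
```

Whichever tool does the extraction, it only works as long as the version stays on a single line in that exact format.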

Requirements

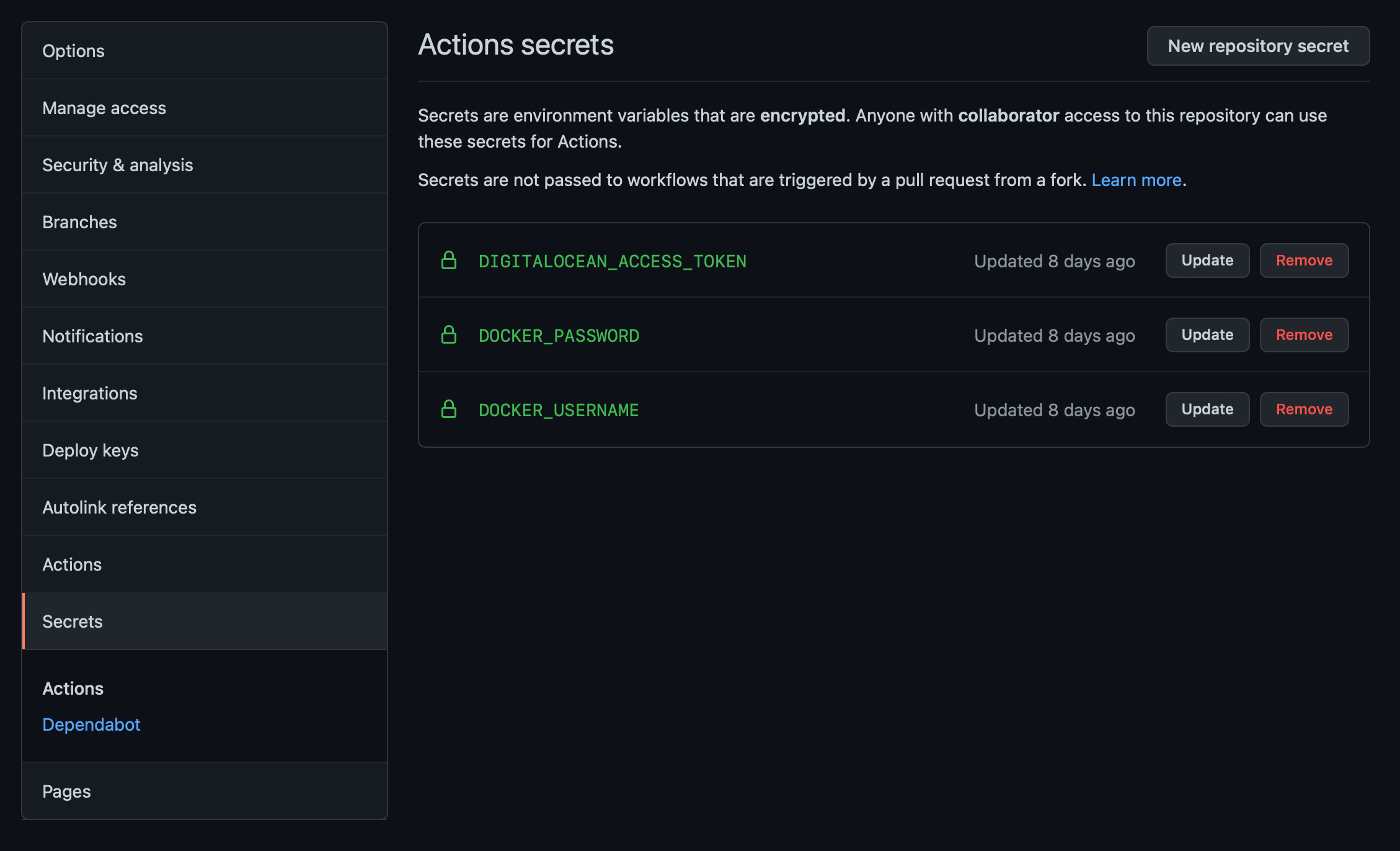

A lot is required in the background to make this work. There are secrets stored in GitHub, and secrets that I keep in Kubernetes. The GitHub secrets are used for Docker tasks, and the Kubernetes secrets are used for environment variables.

The GitHub secrets are pretty self-explanatory. The workflow later in this post references three of them: DIGITALOCEAN_ACCESS_TOKEN, DOCKER_USERNAME and DOCKER_PASSWORD.

The Digital Ocean access token can be found in the API section of the dashboard. The Docker username and password are what you use to log in to Docker Hub (where I keep my images).

The following is used to create the Kubernetes secrets:

kubectl create secret docker-registry docker-registry -n appname \
  --docker-username=DOCKER_USERNAME \
  --docker-password=DOCKER_PASSWORD \
  --docker-email=DOCKER_EMAIL

kubectl create secret generic appname-db-details -n appname \
  --from-literal="username=digital-ocean-db-user" \
  --from-literal="password=digital-ocean-db-password" \
  --from-literal="host=digital-ocean-db-host" \
  --from-literal="port=digital-ocean-db-port"

kubectl create secret generic prod-route53-credentials-secret -n cert-manager \
  --from-literal="secret-access-key=SECRET_KEY"

The first secret allows Kubernetes to pull images from the private Docker registry.

The second secret holds the database details, which are passed in to the application as environment variables. This is really cool, because you can have multiple environments (dev, staging, prod) and just use different namespaces to store the relevant variables. No changes to pipelines.

The third secret is used by the Let's Encrypt cert manager to automatically configure the DNS, where my DNS is on AWS. I've had so much hassle with web-based validation that I now just skip it. This is mainly due to the way I use the load balancers at Digital Ocean, as they pass the traffic through and this causes trouble. I now much prefer DNS validation.
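On the application side, the second secret just shows up as ordinary environment variables. Here is a minimal sketch of how a Go application might read them, assuming the DB_* variable names used in deployment.yml further down. The dbConfig type and loadDBConfig function are hypothetical names for illustration, not the real application code.

```go
package main

import (
	"fmt"
	"os"
)

// dbConfig holds the database settings the Deployment injects as
// environment variables (names assumed from deployment.yml).
type dbConfig struct {
	Name, Username, Password, Host, Port string
}

// loadDBConfig reads every DB_* variable through getenv and reports the
// first one that is missing or empty. Taking getenv as a parameter is an
// illustrative choice that keeps the function easy to test.
func loadDBConfig(getenv func(string) string) (dbConfig, error) {
	var cfg dbConfig
	fields := []struct {
		key string
		dst *string
	}{
		{"DB_NAME", &cfg.Name},
		{"DB_USERNAME", &cfg.Username},
		{"DB_PASSWORD", &cfg.Password},
		{"DB_HOST", &cfg.Host},
		{"DB_PORT", &cfg.Port},
	}
	for _, f := range fields {
		v := getenv(f.key)
		if v == "" {
			return dbConfig{}, fmt.Errorf("missing environment variable %s", f.key)
		}
		*f.dst = v
	}
	return cfg, nil
}

func main() {
	cfg, err := loadDBConfig(os.Getenv)
	if err != nil {
		fmt.Println("config error:", err)
		return
	}
	// Never print the password; the rest is enough to confirm the wiring.
	fmt.Printf("connecting to %s:%s/%s as %s\n", cfg.Host, cfg.Port, cfg.Name, cfg.Username)
}
```

Because the code only ever looks at the environment, the same image can run in any namespace and pick up whatever values that namespace's secret provides.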

Manifests

Provisioning

There are a few manifests that I use to spin up a new Kubernetes cluster which I thought would be handy for anyone doing the same.

They are listed in order of application. The pre-requisite for this step is to have a Kubernetes cluster created.

namespace.yml

apiVersion: v1
kind: Namespace
metadata:
  name: appname

ingress.yml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: appname-ingress
  namespace: appname
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/server-snippet: |-
      location /metrics { return 404; }
      location /debug { return 404; }
spec:
  tls:
    - hosts:
        - appname.com
      secretName: appname-tls
  rules:
    - host: appname.com
      http:
        paths:
          - backend:
              serviceName: appname
              servicePort: 80

ingress-controller.yml

controller:
  image:
    repository: k8s.gcr.io/ingress-nginx/controller
    tag: "v0.43.0"
  containerPort:
    http: 80
    https: 443
  # Will add custom configuration options to Nginx https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/
  config:
    compute-full-forwarded-for: "true"
    use-forwarded-headers: "true"
    use-proxy-protocol: "true"
  ## Election ID to use for status update
  electionID: ingress-controller-leader
  ingressClass: nginx
  kind: DaemonSet
  # Define requests resources to avoid probe issues due to CPU utilization in busy nodes
  # ref: https://github.com/kubernetes/ingress-nginx/issues/4735#issuecomment-551204903
  # Ideally, there should be no limits.
  # https://engineering.indeedblog.com/blog/2019/12/cpu-throttling-regression-fix/
  resources:
    limits:
      cpu: 100m
      memory: 90Mi
    requests:
      cpu: 100m
      memory: 90Mi
  service:
    enabled: true
    annotations:
      # https://developers.digitalocean.com/documentation/v2/#load-balancers
      # https://www.digitalocean.com/docs/kubernetes/how-to/configure-load-balancers/
      service.beta.kubernetes.io/do-loadbalancer-name: "KubernetesLoadBalancer"
      service.beta.kubernetes.io/do-loadbalancer-hostname: lb.thenewsbias.com
      service.beta.kubernetes.io/do-loadbalancer-enable-proxy-protocol: "true"
      service.beta.kubernetes.io/do-loadbalancer-algorithm: "round_robin"
      service.beta.kubernetes.io/do-loadbalancer-healthcheck-port: "80"
      service.beta.kubernetes.io/do-loadbalancer-healthcheck-protocol: "http"
      service.beta.kubernetes.io/do-loadbalancer-healthcheck-path: "/healthz"
      service.beta.kubernetes.io/do-loadbalancer-protocol: "tcp"
      # service.beta.kubernetes.io/do-loadbalancer-http-ports: "80"
      # service.beta.kubernetes.io/do-loadbalancer-tls-ports: "443"
      service.beta.kubernetes.io/do-loadbalancer-tls-passthrough: "true"
      # lb-small, lb-medium, lb-large
      service.beta.kubernetes.io/do-loadbalancer-size-slug: "lb-small"
    # loadBalancerIP: ""
    loadBalancerSourceRanges: []
    externalTrafficPolicy: "Local"
    enableHttp: true
    enableHttps: true
    # specifies the health check node port (numeric port number) for the service. If healthCheckNodePort isn’t specified,
    # the service controller allocates a port from your cluster’s NodePort range.
    # Ref: https://kubernetes.io/docs/tasks/access-application-cluster/create-external-load-balancer/#preserving-the-client-source-ip
    # healthCheckNodePort: 0
    ports:
      http: 80
      https: 443
    targetPorts:
      http: http
      https: https
    type: LoadBalancer
    nodePorts:
      http: "30021"
      https: "30248"
      tcp: {}
      udp: {}
  admissionWebhooks:
    enabled: true
    # failurePolicy: Fail
    failurePolicy: Ignore
    timeoutSeconds: 10

Cert manager

Install the cert manager using the following command:

kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.6.1/cert-manager.yaml

cluster-issuers.yml

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
  namespace: kube-system
spec:
  acme:
    # The ACME server URL
    server: https://acme-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: email@domain.com
    # Name of a secret used to store the ACME account private key
    privateKeySecretRef:
      name: letsencrypt-prod
    solvers:
      - dns01:
          route53:
            accessKeyID: ACCESS_KEY_ID
            region: eu-east-1
            role: ""
            secretAccessKeySecretRef:
              key: secret-access-key
              name: prod-route53-credentials-secret
---
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
  namespace: kube-system
spec:
  acme:
    # The ACME server URL
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: email@domain.com
    # Name of a secret used to store the ACME account private key
    privateKeySecretRef:
      name: letsencrypt-staging
    solvers:
      - dns01:
          route53:
            accessKeyID: ACCESS_KEY_ID
            region: eu-east-1
            role: ""
            secretAccessKeySecretRef:
              key: secret-access-key
              name: prod-route53-credentials-secret

cert-manager.yml

installCRDs: true
resources:
  requests:
    cpu: 50m
    memory: 200Mi
  limits:
    cpu: 50m
    memory: 400Mi
webhook:
  resources:
    requests:
      cpu: 10m
      memory: 32Mi
    limits:
      cpu: 10m
      memory: 32Mi
cainjector:
  resources:
    requests:
      cpu: 50m
      memory: 200Mi
    limits:
      cpu: 50m
      memory: 400Mi

There we go - new cluster spun up and ready to get the services and deployments done.

Application

There are only two manifests for the application - service and deployment.

service.yml

apiVersion: v1
kind: Service
metadata:
  name: appname
  namespace: appname
spec:
  selector:
    app: appname
  type: ClusterIP
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
      name: http

deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: appname
  namespace: appname
spec:
  replicas: 1
  selector:
    matchLabels:
      app: appname
  template:
    metadata:
      labels:
        app: appname
    spec:
      imagePullSecrets:
        - name: docker-registry
      containers:
        - name: appname
          image: username/appname:$VERSION
          env:
            - name: DB_NAME
              value: "appname-db-name"
            - name: DB_USERNAME
              valueFrom:
                secretKeyRef:
                  name: appname-db-details
                  key: username
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: appname-db-details
                  key: password
            - name: DB_HOST
              valueFrom:
                secretKeyRef:
                  name: appname-db-details
                  key: host
            - name: DB_PORT
              valueFrom:
                secretKeyRef:
                  name: appname-db-details
                  key: port
            - name: STATIC_ENVIRONMENT_VARIABLE
              value: "example"
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
          resources:
            limits:
              cpu: 500m
              memory: 1Gi
            requests:
              cpu: 100m
              memory: 500Mi

If you take a look at the image line, you see $VERSION being used. This is where the magic happens.

Let's bring the Makefile line back:

VERSION=${version} envsubst < k8s/deployment.yml | kubectl apply -n appname -f -

The version is grepped from main.go, and envsubst then replaces $VERSION in the manifest with the value of the environment variable before the result is piped to kubectl.
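If envsubst is new to you, the substitution itself is easy to picture. The sketch below does the equivalent in Go using os.Expand from the standard library. The renderManifest function is a hypothetical name for illustration and is not part of the pipeline, which uses envsubst directly.

```go
package main

import (
	"fmt"
	"os"
)

// renderManifest replaces $NAME and ${NAME} placeholders in manifest with
// values from vars. Names that are not in vars become empty strings, which
// is also how envsubst treats unset variables.
// (Illustrative helper only; the Makefile pipes the file through envsubst.)
func renderManifest(manifest string, vars map[string]string) string {
	return os.Expand(manifest, func(name string) string {
		return vars[name]
	})
}

func main() {
	line := "image: username/appname:$VERSION"
	fmt.Println(renderManifest(line, map[string]string{"VERSION": "0.1"}))
}
```

One thing to keep in mind with this kind of templating is that every `$NAME` in the file is a candidate for substitution, so a manifest that needs a literal dollar sign would need a more careful approach.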

GitHub Actions

The final task was to put this all together in a GitHub Actions workflow.

# Change this to whenever you want this to run
on: [push, pull_request]
name: Workflow
jobs:
  test:
    strategy:
      matrix:
        go-version: [1.17.x]
        os: [ubuntu-latest]
    runs-on: ${{ matrix.os }}
    steps:
      - name: Install Go
        uses: actions/setup-go@v2
        with:
          go-version: ${{ matrix.go-version }}
      - name: Checkout code
        uses: actions/checkout@v2
      - name: Test
        run: go test
      - name: Coverage
        run: go test -cover
      - name: Build
        run: go build
  deploy:
    # Only deploy once the test job has passed
    needs: test
    strategy:
      matrix:
        go-version: [1.17.x]
        os: [ubuntu-latest]
    runs-on: ${{ matrix.os }}
    steps:
      - name: Install Go
        uses: actions/setup-go@v2
        with:
          go-version: ${{ matrix.go-version }}
      - name: Checkout code
        uses: actions/checkout@v2
      - name: Log in to Docker Hub
        uses: docker/login-action@f054a8b539a109f9f41c372932f1ae047eff08c9
        with:
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_PASSWORD }}
      - name: Docker
        run: make publish
      - name: Checkout code
        uses: actions/checkout@v2
      - name: Install doctl
        uses: digitalocean/action-doctl@v2
        with:
          token: ${{ secrets.DIGITALOCEAN_ACCESS_TOKEN }}
      - name: Save DigitalOcean kubeconfig with short-lived credentials
        run: doctl kubernetes cluster kubeconfig save --expiry-seconds 600 digital-ocean-kubernetes-cluster-name
      - name: Deploy to DigitalOcean Kubernetes
        run: make update
      - name: Verify deployment
        run: kubectl rollout status deployment/appname -n appname

The first part is used just for testing. Soon I'll add a step to upload the code coverage to CodeCov, which I've found to be a great service.

The second part, deploy, is where the magic happens. The steps are above, but basically:

- Log in to Docker

- Build and publish the image using the version

- Checkout the code (so we can run the kubernetes manifests)

- Install doctl to get Kubernetes access temporarily for a specific cluster (this can also be in a secret to be fully generic)

- Deploy using make update

- Verify the deployment

The end result is that code is pushed, built and deployed automatically.

Conclusion

These are the scripts and flows I use to get a really simple CI/CD pipeline set up where everything is secure and secrets are secret. I plan to create a public GitHub repo with these scripts as well as ones for Bitbucket and other providers.
